Open Access | Interview

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Navigating the future of robotic surgery: a conversation with Prof. Enrico Checcucci on 3D and AR integration

* Corresponding author: Abdel Rahman Jaber

Mailing address: Adventhealth Global Robotics Institute, 380 Celebration Place, Orlando, FL, 34747, USA.

Email: asjaber@hotmail.com

Received: 30 November 2024 / Accepted: 02 December 2024 / Published: 26 March 2025

DOI: 10.31491/UTJ.2025.03.034

Abstract

The article is an interview with Prof. Enrico Checcucci, Department of Urology, San Luigi Gonzaga Hospital, University of Turin, Orbassano, Turin, Italy, conducted by Abdel Rahman Jaber, Adventhealth Global Robotics Institute, 380 Celebration Place, Orlando, FL, 34747, USA, on behalf of Uro-Technology Journal. This interview explores the transformative role of 3D and augmented reality technologies in robotic surgery, emphasizing their applications, challenges, and future potential.

Prof. Enrico Checcucci

Prof. Enrico Checcucci is a consultant urologist at the IRCCS Candiolo Cancer Institute. He is President of ESRU, full member of YAU Urotechnology Group, board member of YUO and associate member of GO of EAU. His research has focused on prostate MRI, targeted biopsy, and 3D model-guided surgery with augmented reality and artificial intelligence guidance. Dr. Checcucci has authored or co-authored over 400 scientific publications, both full papers and abstracts presented at national and international conferences.

(https://www.researchgate.net/profile/Enrico-Checcucci)

Abdel Rahman Jaber:

What are some of the more common applications of 3D and AR technologies in robotic urology surgery today, and are there certain case scenarios where they are most impactful?

Enrico Checcucci:

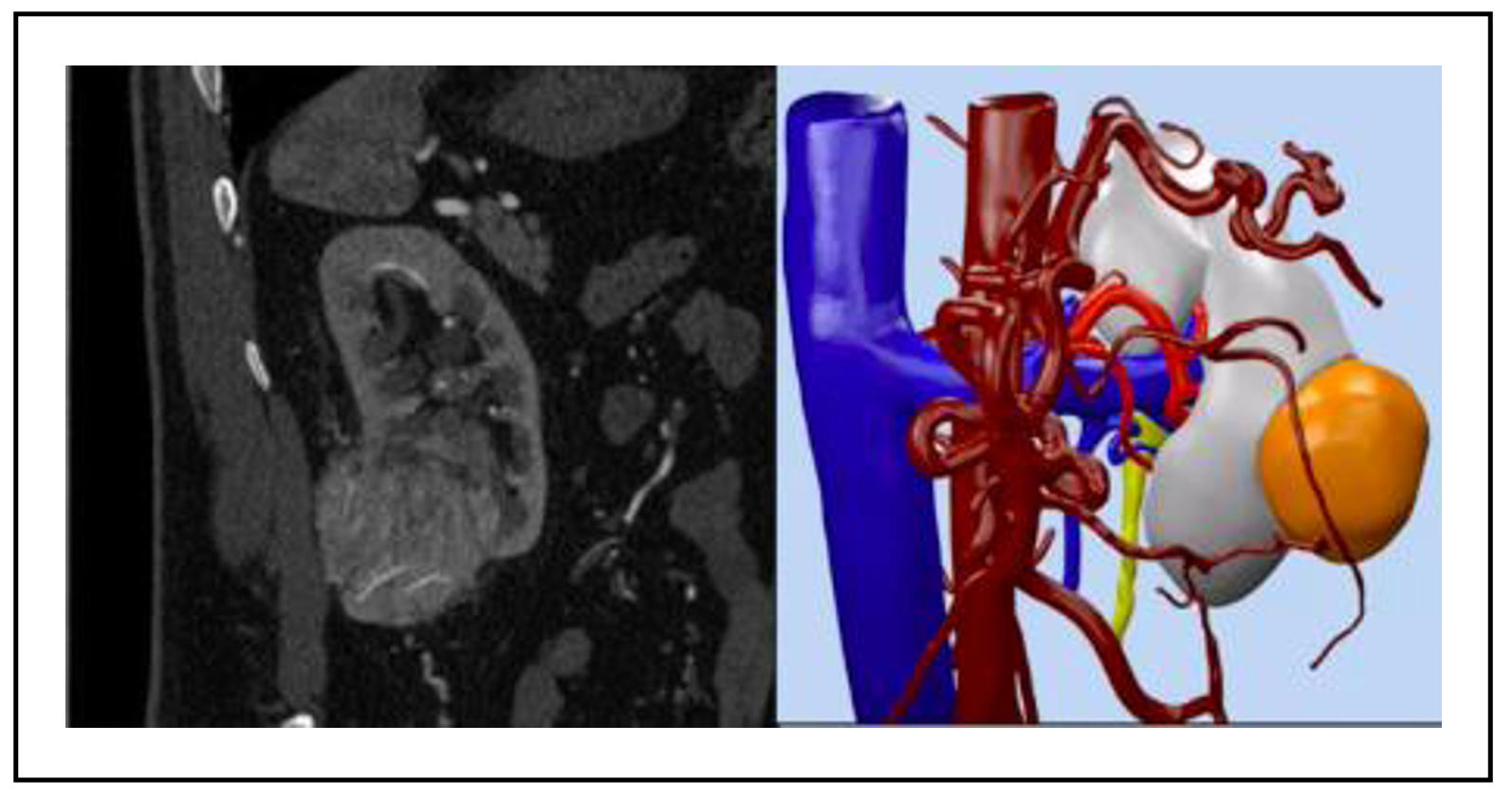

In recent years, 3D model reconstruction has gained significant traction in urology due to its ability to represent a patient’s precise anatomy in three dimensions (Figure 1). These models are created from conventional 2D imaging such as CT scans or MRIs [1]. Initially, they were primarily used for surgical planning, aiding surgeons in strategizing their procedures.

Recently, advancements in engineering-physician collaboration have introduced augmented reality (AR) applications in surgery. By overlaying 3D virtual images onto real anatomy in real time, AR acts as a navigator during operations. However, while promising, AR technology is still experimental and faces challenges in dynamic, accurate overlaying [2, 3].

Figure 1. 3D virtual reconstruction of kidney and tumor anatomy.

Abdel Rahman Jaber:

Do you feel that the availability of these enhanced visual feedback systems with 3D imaging and augmented reality has improved clinical metrics on surgical precision, complications, and patient outcomes compared with traditional robotic systems? Can you provide examples?

Enrico Checcucci:

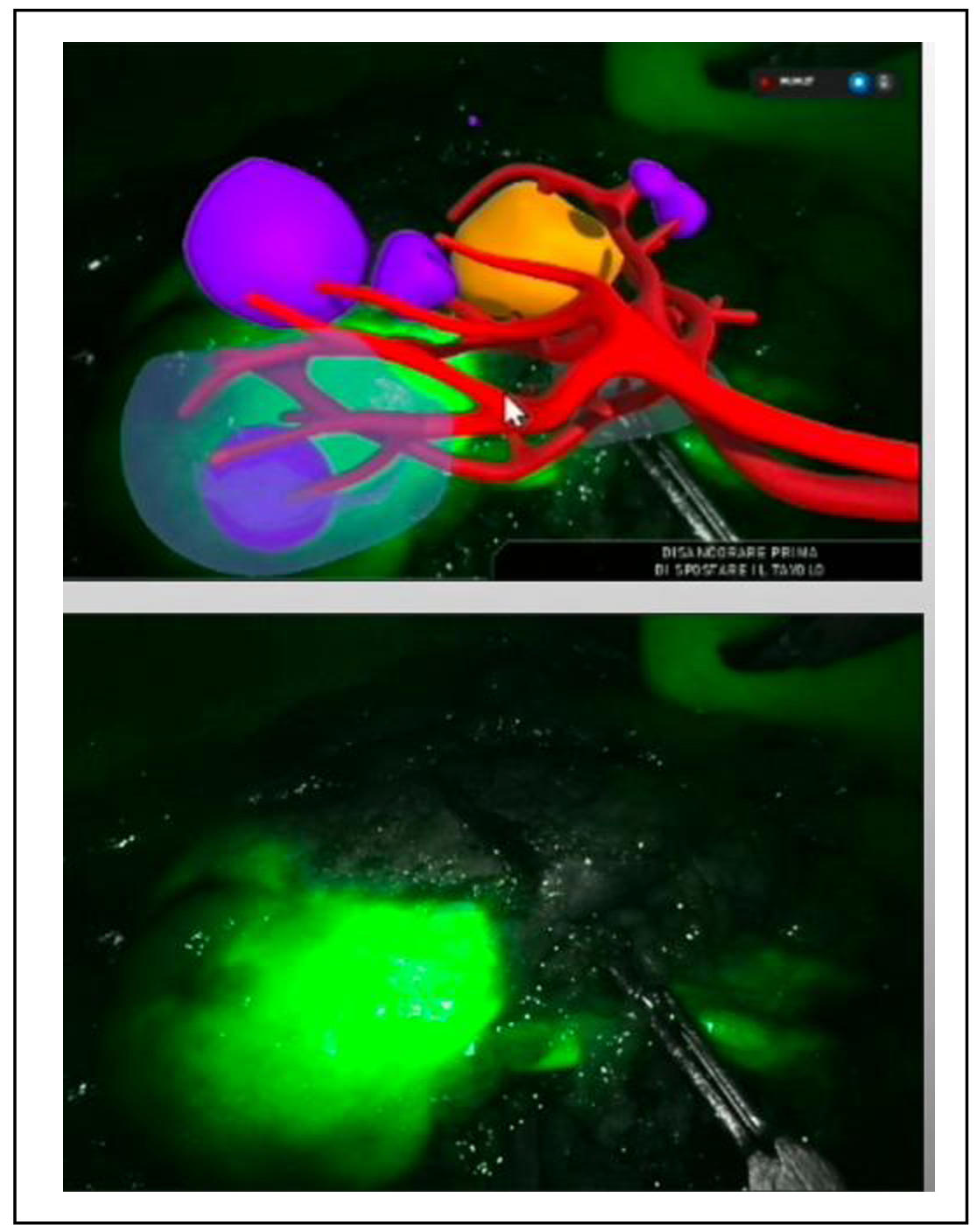

Although AR guidance is still under development, significant advancements have been made. For instance, a recent systematic review and meta-analysis evaluated 3D and AR guidance in partial nephrectomy [4] (Figure 2). The findings revealed that 3D technology significantly reduced global ischemia rates, enabled more frequent tumor enucleation, reduced the opening of the collecting system, and minimized blood loss. Additionally, 3D guidance was associated with a notable reduction in transfusion rates without compromising surgical margins or increasing complications.

For radical prostatectomy, a narrative review highlighted improvements in preoperative planning, intraoperative navigation, and real-time decision-making facilitated by 3D imaging and AR guidance [5].

Figure 2. 3D AR guidance during robotic partial nephrectomy.

Abdel Rahman Jaber:

How does the integration of 3D and AR technologies with currently used robotic surgery platforms like the da Vinci system work? What are the current challenges to achieving real-time implementation?

Enrico Checcucci:

Preliminary AR-guided experiences have been reported for robotic partial nephrectomy and radical prostatectomy. These systems typically use hardware and software customized for AR applications. For example, 3D models can be integrated into the da Vinci surgical console using TilePro software. The AR imagery is displayed in the console’s lower section, where a surgeon’s assistant manually adjusts the alignment in real time to facilitate image-guided surgery.

Challenges to achieving fully automated AR overlay fall into two main areas. First is tissue deformation caused by traction during surgery, which complicates real-time model alignment. Second is the accurate and automatic anchoring of virtual images to real anatomy, a task hindered by factors like color similarity in the surgical field, robotic arm interference, and patient-to-patient anatomical variability. Addressing these computational hurdles remains a priority.
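Editor's illustration: the two challenges described above can be made concrete with a toy example. The sketch below is not the method used by Prof. Checcucci's group or by any commercial system; it is a minimal, hypothetical 2D landmark registration, assuming that a few matched points are already known on both the virtual model and the endoscopic image. The function names (`fit_rigid_2d`, `apply_rigid_2d`, `rms_error`) and all coordinates are invented for illustration. It fits the best rotation and translation in the least-squares sense, then shows that once a single landmark is displaced (a crude stand-in for tissue deformation under traction), no rigid alignment can remove the residual error.

```python
import math


def fit_rigid_2d(model_pts, image_pts):
    """Least-squares rotation angle and translation mapping model_pts onto image_pts."""
    n = len(model_pts)
    if n != len(image_pts) or n < 2:
        raise ValueError("need at least two matched landmark pairs")
    # Centroids of both landmark sets
    mcx = sum(x for x, _ in model_pts) / n
    mcy = sum(y for _, y in model_pts) / n
    icx = sum(x for x, _ in image_pts) / n
    icy = sum(y for _, y in image_pts) / n
    # Accumulate dot and cross products of the centered point pairs
    dot = cross = 0.0
    for (mx, my), (ix, iy) in zip(model_pts, image_pts):
        ax, ay = mx - mcx, my - mcy
        bx, by = ix - icx, iy - icy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # optimal rotation angle in radians
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated model centroid onto the image centroid
    tx = icx - (c * mcx - s * mcy)
    ty = icy - (s * mcx + c * mcy)
    return theta, (tx, ty)


def apply_rigid_2d(theta, t, pts):
    """Rotate pts by theta about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in pts]


def rms_error(a, b):
    """Root-mean-square distance between two matched point lists."""
    total = sum((ax - bx) ** 2 + (ay - by) ** 2 for (ax, ay), (bx, by) in zip(a, b))
    return math.sqrt(total / len(a))


if __name__ == "__main__":
    # Hypothetical landmarks on the virtual model (arbitrary units)
    model = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]

    # Case 1: the organ only rotated and shifted, so a rigid fit is enough
    image = apply_rigid_2d(math.radians(30), (10.0, -5.0), model)
    theta, t = fit_rigid_2d(model, image)
    print("rigid case residual:", rms_error(apply_rigid_2d(theta, t, model), image))

    # Case 2: one landmark is pulled sideways, mimicking local deformation
    deformed = list(image)
    deformed[2] = (deformed[2][0] + 1.0, deformed[2][1])
    theta, t = fit_rigid_2d(model, deformed)
    print("deformed case residual:", rms_error(apply_rigid_2d(theta, t, model), deformed))
```

In the first case the residual is numerically zero; in the second it stays well above zero however the model is rotated or shifted, which is one way to see why elastic or deformable models are being pursued. The sketch also sidesteps the anchoring problem entirely by assuming the matched landmarks are given, whereas finding them automatically in a live surgical field is the hard part.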

Abdel Rahman Jaber:

How do 3D and AR technologies help the training process for new surgeons? Do seasoned surgeons have to go through a learning curve with these tools until they become part of their routine practice?

Enrico Checcucci:

For novice surgeons, 3D models provide an invaluable tool for understanding surgical anatomy. They allow trainees to visualize and mentally prepare for the procedures they will encounter in live surgeries, thus reducing unexpected events and improving procedural confidence.

For experienced surgeons, there is a learning curve in mastering this technology and in understanding which information provided by the models is actually useful for the surgery.

Furthermore, surgeons and their assistants require dedicated training to understand the 3D reconstruction process and effectively navigate AR systems. At the University of Turin, we are developing specialized courses to address this need and integrate these technologies into routine practice.

Abdel Rahman Jaber:

What do you consider the next big jump in 3D and augmented reality technology? Do you have any projects in an experimental stage or any breakthroughs currently in development that you find particularly exciting?

Enrico Checcucci:

Future advancements should focus on two key areas. First, robust scientific validation through high-quality studies like randomized controlled trials (RCTs) is essential. At our institution, we are nearing completion of an ongoing trial comparing 3D AR-guided robotic prostatectomy with the standard approach (ISRCTN15750887) with promising data in terms of reduction of positive surgical margins.

Second, technological innovations, such as the development of elastic/deformable 3D models and automated AR overlay systems, are critical for widespread adoption. These breakthroughs would enable seamless integration into surgical workflows, making this technology more accessible and impactful.

Abdel Rahman Jaber:

What do you consider the biggest challenges to significant market penetration of 3D and AR in robotic urological surgery: cost, technical limitations, or resistance from healthcare professionals?

Enrico Checcucci:

The cost of creating 3D virtual models has become relatively accessible, allowing for broader adoption. However, AR technology still faces significant technological and legal barriers, particularly in terms of software patents and regulatory approval.

Cost-benefit analyses are also necessary to determine if these technologies lead to overall savings. For instance, tailored surgeries that reduce complications and preserve kidney function could potentially offset the initial investment in 3D and AR systems by decreasing long-term healthcare expenses.

Abdel Rahman Jaber:

What are some of the ethical or practical issues in using augmented reality in surgical practice, related to data privacy, dependency on technology, consent by patients, or regulatory oversight?

Enrico Checcucci:

Ethical and practical concerns with AR stem largely from software authorization and patent restrictions. However, 3D models created by certified companies meet CE marking standards and are considered medical devices.

A more complex issue arises if these models are shared via 5G in a virtual reality environment or the metaverse. In such cases, data security, privacy, and ownership must be rigorously managed to ensure patient safety and regulatory compliance [6].

Abdel Rahman Jaber:

How does AI complement 3D and AR technologies, and what potential synergies do you see in these innovations driving the future of robotic surgery?

Enrico Checcucci:

Artificial intelligence represents the natural evolution of 3D and AR technologies. AI can significantly accelerate the 3D model reconstruction process and enhance its precision [7]. Initially, reconstruction was performed entirely by hand by engineers and urologists working together; today, hybrid software can automatically refine the models or automatically segment the 2D images.

Moreover, convolutional neural networks could facilitate automatic AR overlay alignment, overcoming current computational challenges and enabling real-time image guidance [8].

Abdel Rahman Jaber:

Besides ongoing applications, how do you foresee immersive technologies, such as the metaverse, contributing to personalized surgical care or educational platforms in the near future?

Enrico Checcucci:

Immersive technologies like the metaverse are poised to play an increasingly significant role in daily clinical practice. Within this virtual environment, patient data can be securely shared across long distances, enabling remote multidisciplinary team discussions among experts and with patients. Additionally, 3D models can be integrated into the metaverse for collaborative surgical planning. Expert surgeons can contribute to preoperative discussions, offering advice and practical insights, particularly for complex procedures, with the goal of enhancing precision and procedural outcomes. These advancements hold immense potential to revolutionize both personalized surgical care and advanced educational platforms.

References

1. Checcucci E, Piana A, Volpi G, Quarà A, De Cillis S, Piramide F, et al. Visual extended reality tools in image-guided surgery in urology: a systematic review. Eur J Nucl Med Mol Imaging, 2024, 51(10): 3109-3134.

2. Amparore D, Piramide F, De Cillis S, Verri P, Piana A, Pecoraro A, et al. Robotic partial nephrectomy in 3D virtual reconstructions era: is the paradigm changed? World J Urol, 2022, 40(3): 659-670.

3. Rodler S, Kidess MA, Westhofen T, Kowalewski KF, Belenchon IR, Taratkin M, et al. A systematic review of new imaging technologies for robotic prostatectomy: from molecular imaging to augmented reality. J Clin Med, 2023, 12(16): 5425-5433.

4. Piramide F, Kowalewski KF, Cacciamani G, Rivero Belenchon I, Taratkin M, Carbonara U, et al. Three-dimensional model-assisted minimally invasive partial nephrectomy: a systematic review with meta-analysis of comparative studies. Eur Urol Oncol, 2022, 5(6): 640-650.

5. Della Corte M, Quarà A, De Cillis S, Volpi G, Amparore D, Piramide F, et al. 3D virtual models and augmented reality for radical prostatectomy: a narrative review. Chin Clin Oncol, 2024, 13(4): 56-65.

6. Checcucci E, Veccia A, Puliatti S, De Backer P, Piazza P, Kowalewski KF, et al. Metaverse in surgery - origins and future potential. Nat Rev Urol, 2024.

7. Sica M, Piazzolla P, Amparore D, Verri P, De Cillis S, Piramide F, et al. 3D model artificial intelligence-guided automatic augmented reality images during robotic partial nephrectomy. Diagnostics, 2023, 13(22): 3454-3464.

8. Piana A, Amparore D, Sica M, Volpi G, Checcucci E, Piramide F, et al. Automatic 3D augmented-reality robot-assisted partial nephrectomy using machine learning: our pioneer experience. Cancers, 2024, 16(5): 1047-1058.